Model Context Protocol

Model Context Protocol (MCP) is a function-calling standard and framework developed by Anthropic and published in November 2024.

Let’s recap what we know about function calling.

Function Calling

Function calling allows the LLM to do tasks it could not normally achieve. This is done by giving the model some tools that allow it to interact with the outside world to, for instance, gather information or control other applications. To use function calling, we first have to

- write a system prompt that

  - describes the tools the LLM has access to.

  - prompts the LLM to structure the output in a certain way (usually JSON format) if it wants to use a tool.
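As a reminder, such a system prompt could be assembled roughly as follows. This is only a minimal sketch: the tools (`get_weather`, `add`), their descriptions, and the exact JSON shape of a tool call are made up for illustration and are not prescribed by any particular model or library.

```python
import json

# Hypothetical tool descriptions; names and parameters are illustrative only.
TOOLS = [
    {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "parameters": {"city": "string"},
    },
    {
        "name": "add",
        "description": "Add two numbers.",
        "parameters": {"a": "number", "b": "number"},
    },
]


def build_system_prompt(tools):
    """Describe the tools and ask for JSON output when a tool is needed."""
    tool_lines = "\n".join(
        f"- {t['name']}: {t['description']} Parameters: {json.dumps(t['parameters'])}"
        for t in tools
    )
    # Assumed call format: one JSON object with "tool" and "arguments" keys.
    example_call = json.dumps({"tool": "add", "arguments": {"a": 1, "b": 2}})
    return (
        "You have access to the following tools:\n"
        f"{tool_lines}\n\n"
        "If you want to use a tool, reply with a single JSON object of the form\n"
        f"{example_call}\n"
        "and nothing else. Otherwise, answer the user directly."
    )


system_prompt = build_system_prompt(TOOLS)
print(system_prompt)
```

Note that every application is free to invent its own prompt wording and call format here, which is exactly the situation a shared standard tries to improve.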

We already did these things in the course. The basic function calling workflow then looks something like this:

- The LLM is presented with a user query.

- It starts reasoning internally about whether to use a tool for the task. If so, it generates a function call. As mentioned, this takes the form of generating structured output.

- The LLM output is then parsed for tool calls. If tool calls are present and syntactically correct, they are executed.

- The result of the tool call is returned to the LLM, usually indicated by a keyword (e.g. Observation:).

- When the LLM has gathered enough information or thinks it does not need any more tool calls, a final answer is generated, if applicable.
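The workflow above can be sketched as a small loop. To keep the example runnable without a model, `scripted_llm` is a hypothetical stand-in that requests the tool once and then answers; the `add` tool and the `{"tool": ..., "arguments": ...}` call format are likewise assumptions for illustration.

```python
import json


# Hypothetical tool; a real setup would register many of these.
def add(a, b):
    return a + b


TOOLS = {"add": add}


def parse_tool_call(text):
    """Return (name, arguments) if text is a valid tool call, else None."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    tool = data.get("tool")
    arguments = data.get("arguments", {})
    if not isinstance(tool, str) or tool not in TOOLS:
        return None
    if not isinstance(arguments, dict):
        return None
    return tool, arguments


def run_agent(llm, user_query, max_steps=5):
    """Loop: ask the model, execute tool calls, feed back observations."""
    transcript = [f"User: {user_query}"]
    for _ in range(max_steps):
        output = llm("\n".join(transcript))
        call = parse_tool_call(output)
        if call is None:
            return output  # no tool call: treat the output as the final answer
        name, arguments = call
        result = TOOLS[name](**arguments)
        transcript.append(f"Assistant: {output}")
        transcript.append(f"Observation: {result}")
    return "Stopped: too many tool calls."


def scripted_llm(prompt):
    """Stand-in for a real model: call the tool once, then answer."""
    if "Observation:" not in prompt:
        return json.dumps({"tool": "add", "arguments": {"a": 2, "b": 3}})
    observation = prompt.rsplit("Observation: ", 1)[1]
    return f"The sum is {observation}."


answer = run_agent(scripted_llm, "What is 2 + 3?")
print(answer)
```

With a real model, `scripted_llm` would be replaced by a call to your LLM of choice, and the parser would have to be more forgiving about text surrounding the JSON.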

A unified standard

This works well as long as the tools are relatively easy to implement, like executing Python functions. However, you may also want to give the LLM the option to:

- Read your files

- Check your calendar

- Search the web

- Access your databases

- Use your company’s internal tools

Every time developers want to give an AI access to external data or tools, they have to build custom, one-off integrations. It’s like building a different key for every single door.

MCP creates a standardized way for AI models to connect to external resources. Think of it as:

- Universal translator: One standard “language” for AI-to-tool communication

- Plugin system: Like browser extensions, but for AI assistants

- Modular approach: Write a tool once, use it with any MCP-compatible AI

So, first and foremost, MCP is an interface standard that defines how external tools can be integrated into large language models (LLMs). It provides a set of protocols and APIs that allow LLMs to interact with these tools seamlessly. This includes defining the inputs and outputs for each interaction, as well as the mechanisms for error handling and feedback loops.
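To make "defining the inputs and outputs" a bit more concrete: MCP messages are JSON-RPC 2.0 messages, and tools are invoked with the `tools/call` method. The sketch below shows roughly what a tool definition, a call request, and a response look like. The `get_weather` tool and its values are hypothetical, and the field layout reflects my reading of the specification, so check the official spec before relying on the details.

```python
import json

# A tool as a server might advertise it in reply to "tools/list".
# The tool itself (get_weather) is hypothetical.
tool_definition = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# Request sent by the client to invoke the tool.
call_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"city": "Kiel"}},
}

# Successful response: same id, the result carries a list of content items.
call_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": "Sunny, 21 degrees Celsius"}],
        "isError": False,
    },
}

for message in (tool_definition, call_request, call_response):
    print(json.dumps(message, indent=2))
```

The input schema is ordinary JSON Schema, so a client can validate arguments before sending them, and the model can be shown the schema as part of the tool description.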

In addition to the standard definition, Anthropic also released implementations of MCP in a number of languages, including Python. It also hosts a repository on GitHub with examples and documentation that you can use as a starting point for building your own integrations. There is also a large and growing collection of pre-built connectors to third-party services like databases, APIs, and other tools. That means developers don’t have to start from scratch every time they want to add new functionality to their LLMs.

Have a look!

- Find the MCP and MCP server repositories on GitHub.

- Browse through the list of server implementations and find one (or many) that you find interesting.

But what is an MCP server anyway? And how does MCP work exactly?

Core concepts

The following is more or less taken from the official website.

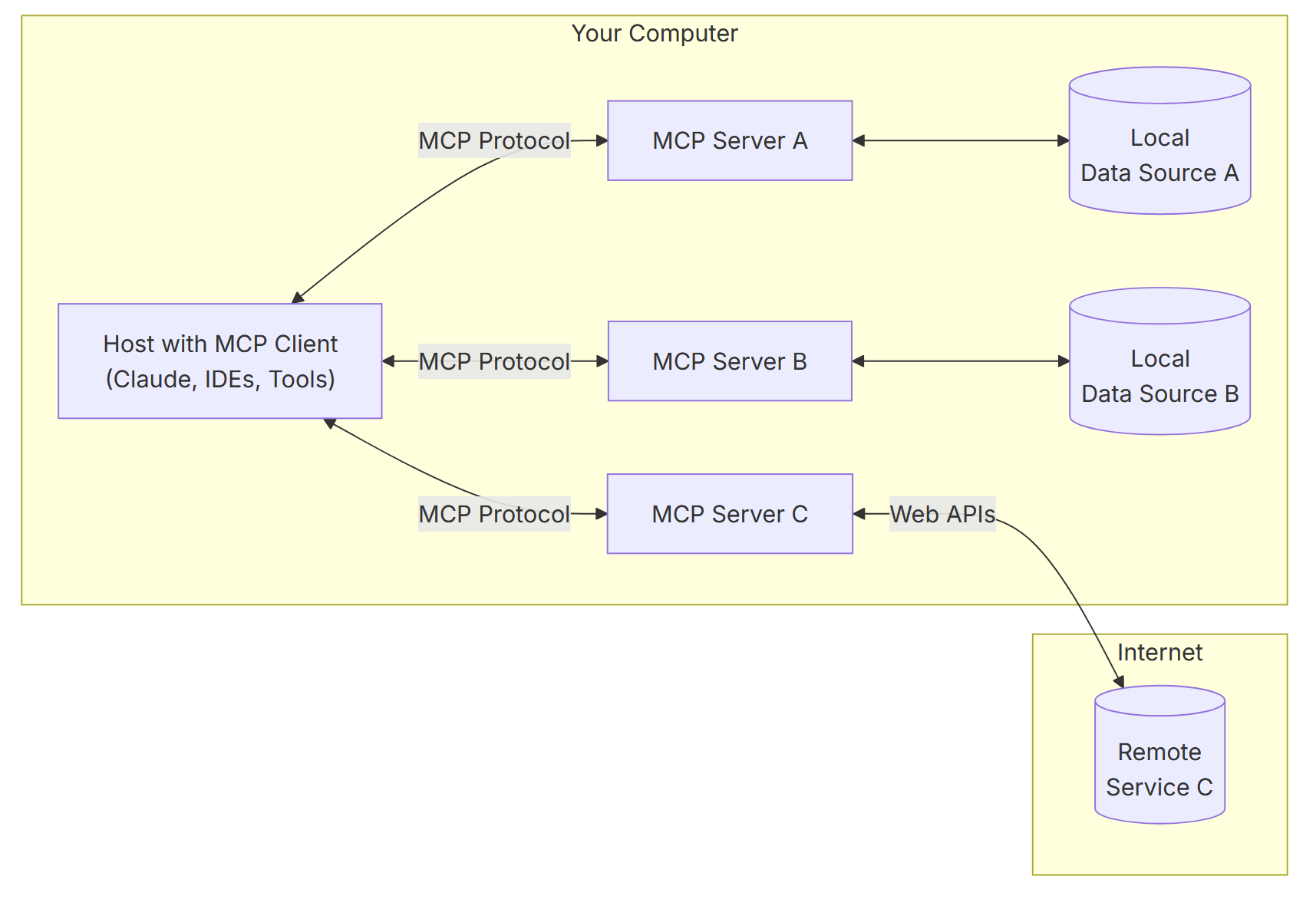

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers:

- MCP Hosts: Programs like Claude Desktop, IDEs, or AI tools that want to access data through MCP

- MCP Clients: Protocol clients that maintain 1:1 connections with servers

- MCP Servers: Lightweight programs that each expose specific capabilities through the standardized Model Context Protocol

- Local Data Sources: Your computer’s files, databases, and services that MCP servers can securely access

- Remote Services: External systems available over the internet (e.g., through APIs) that MCP servers can connect to
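The relationship between host, clients, and servers can be illustrated with a toy, in-process model. All class names here (`ToyServer`, `ToyClient`, `ToyHost`) are hypothetical and there is no real transport or protocol involved; the point is only that the host holds one client per server and routes calls through it.

```python
class ToyServer:
    """Exposes a few capabilities; stands in for an MCP server."""

    def __init__(self, name, tools):
        self.name = name
        self.tools = tools  # mapping: tool name -> Python callable

    def list_tools(self):
        return sorted(self.tools)

    def call_tool(self, tool, arguments):
        return self.tools[tool](**arguments)


class ToyClient:
    """Holds exactly one connection, to exactly one server."""

    def __init__(self, server):
        self.server = server

    def list_tools(self):
        return self.server.list_tools()

    def call_tool(self, tool, arguments):
        return self.server.call_tool(tool, arguments)


class ToyHost:
    """The application: creates one client per server it connects to."""

    def __init__(self):
        self.clients = {}

    def connect(self, server):
        self.clients[server.name] = ToyClient(server)

    def available_tools(self):
        return {name: client.list_tools() for name, client in self.clients.items()}

    def call_tool(self, server_name, tool, arguments):
        return self.clients[server_name].call_tool(tool, arguments)


host = ToyHost()
host.connect(ToyServer("math", {"add": lambda a, b: a + b}))
host.connect(ToyServer("text", {"shout": lambda s: s.upper() + "!"}))

print(host.available_tools())
print(host.call_tool("math", "add", {"a": 2, "b": 3}))
print(host.call_tool("text", "shout", {"s": "hello"}))
```

In a real setup, each client would talk to its server over a transport such as standard input/output or HTTP instead of calling Python methods directly.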

Servers can expose three main types of capabilities:

- Resources: File-like data that can be read by clients (like API responses or file contents)

- Tools: Functions that can be called by the LLM (with user approval)

- Prompts: Pre-written templates that help users accomplish specific tasks
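The difference between the three capability types is easiest to see side by side. The following sketch models a toy "notes" server as a single dispatch function. The method names `resources/read`, `tools/call`, and `prompts/get` follow the MCP specification as far as I know, but the capabilities themselves are hypothetical and the return values are deliberately simplified (a real server wraps them in structured result objects).

```python
# Hypothetical capabilities of a toy "notes" server.
RESOURCES = {"notes://today": "Buy milk. Prepare MCP exercise."}
TOOLS = {"word_count": lambda text: len(text.split())}
PROMPTS = {"summarize": "Summarize the following text in one sentence:\n{text}"}


def handle(method, params):
    """Dispatch a request to the matching capability type (simplified)."""
    if method == "resources/read":
        # Resource: file-like data the client can read.
        return RESOURCES[params["uri"]]
    if method == "tools/call":
        # Tool: a function the LLM can ask to have executed.
        return TOOLS[params["name"]](**params["arguments"])
    if method == "prompts/get":
        # Prompt: a pre-written template filled in with arguments.
        return PROMPTS[params["name"]].format(**params["arguments"])
    raise ValueError(f"Unknown method: {method}")


note = handle("resources/read", {"uri": "notes://today"})
count = handle("tools/call", {"name": "word_count", "arguments": {"text": note}})
prompt = handle("prompts/get", {"name": "summarize", "arguments": {"text": note}})

print(note, count, prompt, sep="\n")
```

A single server may offer any combination of the three; many of the pre-built servers only provide tools.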

As you have seen in the repository, there are lots of pre-built servers available.

MCP in action

Now it’s time to implement a simple example using MCP! There are several ways to get started:

- Follow the official quickstart tutorial in the python-sdk repo.

- Combine the quick-start guides for client and server developers.

- Find some other example implementation on Stack Overflow, Reddit, personal blogs, Medium, etc.

- Ask an LLM to generate a simple script or notebook to set up MCP for you!

A comment on option 4 seems to be in order (this is a course on using generative AI, after all): It’s OK to use an LLM to create code, especially if it’s not your area of expertise. However, always double-check the generated code and make sure that you understand what each line does before running it! This has not only security implications but also practical ones. For example, you may have to explain your code to others. In this course, for instance, you will have to explain the code in your final project presentation to pass. Another word of warning about LLM-generated code: It often works out of the box, but sometimes it does not. In that case, debugging LLM-generated code often takes longer than writing it yourself from scratch!

Now it’s your turn!

- Set up a simple MCP system in a notebook using a local LLM.

- If you use LLM-generated code as a starting point, find code snippets that the LLM generated but that are never used or are otherwise superfluous.

- Have fun using one of the fancy servers from the repo.

- Upload your notebook to Moodle as usual!

Discussion

The final question to discuss is the following:

Is MCP worth spending my (valuable!) time on, or should I rather focus on other things?

MCP is intended to be a standard for function calling in LLMs. It’s not clear yet if others will adopt it. And what is a standard worth if it doesn’t get adopted by the big players? Likewise, it is a framework for function calling, but there are other frameworks out there that also facilitate that, e.g. LangChain, LlamaIndex, Haystack, smolagents, etc. Is MCP the next big thing or just another framework?

Nobody knows what the future holds. A good way of making an educated guess is to ask these three questions:

- What do you think? Is it a good idea?

- What do others think? Especially the big players are of interest here.

- Where does the money come from? Who pays for this stuff? How does the company make money?

Try to find out.

- Take some time to search the internet and see what you can find about MCP, its adoption by big players, who is behind it, etc.

- Discuss in the group! What do you think? Is this a good idea or not? Why?